Highlights

- Microsoft engineer discovers a flaw in OpenAI’s DALL-E 3, enabling inappropriate image generation.

- OpenAI responds swiftly with a fix, while Microsoft acknowledges the issue.

- The incident prompts discussion of AI ethics, highlighting the ongoing challenge of securing advanced AI systems.

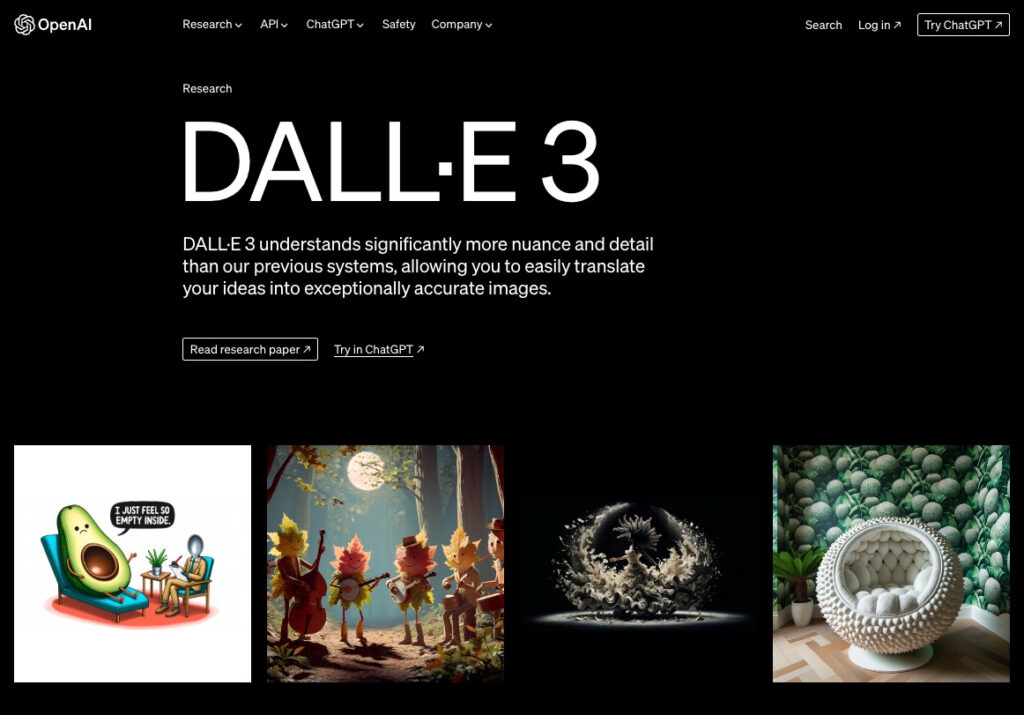

Shane Jones, a software engineering manager at Microsoft, recently disclosed a critical vulnerability in OpenAI’s DALL-E 3 image generation model that allows users to bypass its safety checks and generate inappropriate images.

The discovery raises ethical concerns and underscores how difficult it remains to build secure and responsible AI systems.

Microsoft Finds Loophole in OpenAI’s DALL-E 3

Jones discovered the vulnerability independently in December 2023 and initially reported it to Microsoft. He was advised to contact OpenAI directly, but he received no answer.

He then escalated his concerns to U.S. senators and representatives and to the Washington State Attorney General. In his statement, he stressed that the flaw could be used to create violent and harmful imagery.

To prevent exploitation, the exact nature of the vulnerability has not been disclosed. However, reports suggest it involves manipulating prompts or using certain keywords to circumvent the model’s safety guardrails.

The potential impact is considerable: the ability to generate harmful content raises both ethical concerns and possible legal consequences.

Both Microsoft and OpenAI have acknowledged the issue. OpenAI responded quickly, delivering a remedy within 24 hours of notification.

OpenAI reaffirmed its commitment to safety and to ongoing efforts to improve the security of its models. Microsoft acknowledged Jones’ report but did not share details of its internal discussions.

Recent developments in the case include Jones’ assertion that Microsoft initially tried to suppress his concerns, which prompted him to contact the authorities.

Microsoft denies this, stating that it followed established protocols and respected Jones’ right to raise the issue elsewhere.

The incident has reignited debate about AI governance, transparency, and accountability. Experts are calling for stronger safety controls, open communication, and collaborative efforts to reduce the risks associated with powerful AI systems.

Looking ahead, the incident underscores the importance of responsible AI development and deployment.

Open communication and collaboration among researchers, developers, policymakers, and the general public are considered essential for addressing difficult ethical issues and supporting the safe and beneficial use of AI technologies.

Continuous refinement of safety measures, monitoring, and user education will be needed to address emerging weaknesses and potential misuse of powerful AI systems. This overview is based on publicly available information as of February 1, 2024.

As events progress and additional details emerge, the story may change. For further information, see GeekWire, Microsoft, OpenAI, and The New York Times.

We will continue to closely follow the situation and offer updates as they become available. Please contact us if you have any questions or need additional information.
