Highlights

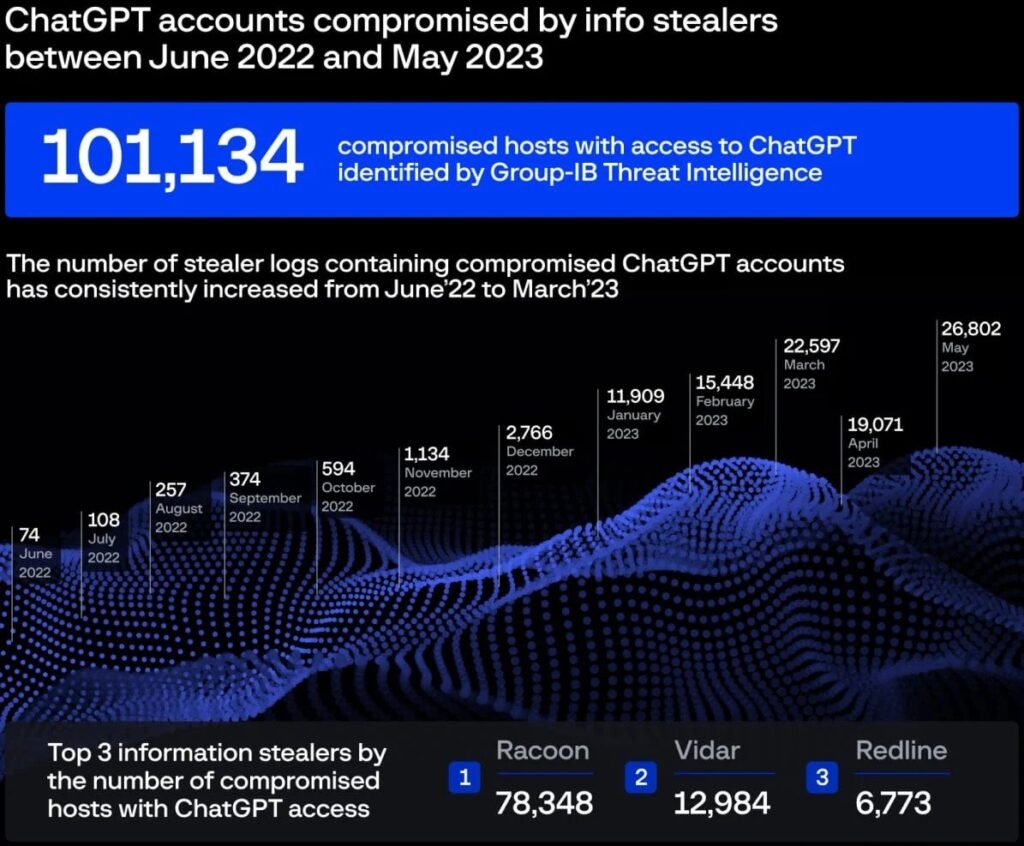

- Security researchers report identifying more than a hundred thousand information-stealer logs.

- The researchers noted that several underground websites host logs containing ChatGPT accounts.

- According to the security firm, more than 101K ChatGPT user accounts have been stolen by info-stealing malware.

Cybersecurity researchers at Group-IB reported that they had spotted more than a hundred thousand information-stealer logs containing ChatGPT accounts on several underground websites.

For those unaware, information-stealing malware targets account data stored in applications such as web browsers, email clients, gaming services, cryptocurrency wallets, and more.

This kind of malware steals credentials saved in web browsers by extracting them from the browser's SQLite database and abusing the Windows CryptUnprotectData function to reverse the encryption of the stored secrets.

The stolen credentials and other data are then packaged into archives known as logs and sent back to the threat actor's server for retrieval.

According to dark web marketplace data, more than 101K ChatGPT user accounts have been stolen by information-stealing malware. The peak was observed in May 2023, when threat actors shared information on 26,800 newly compromised ChatGPT accounts.

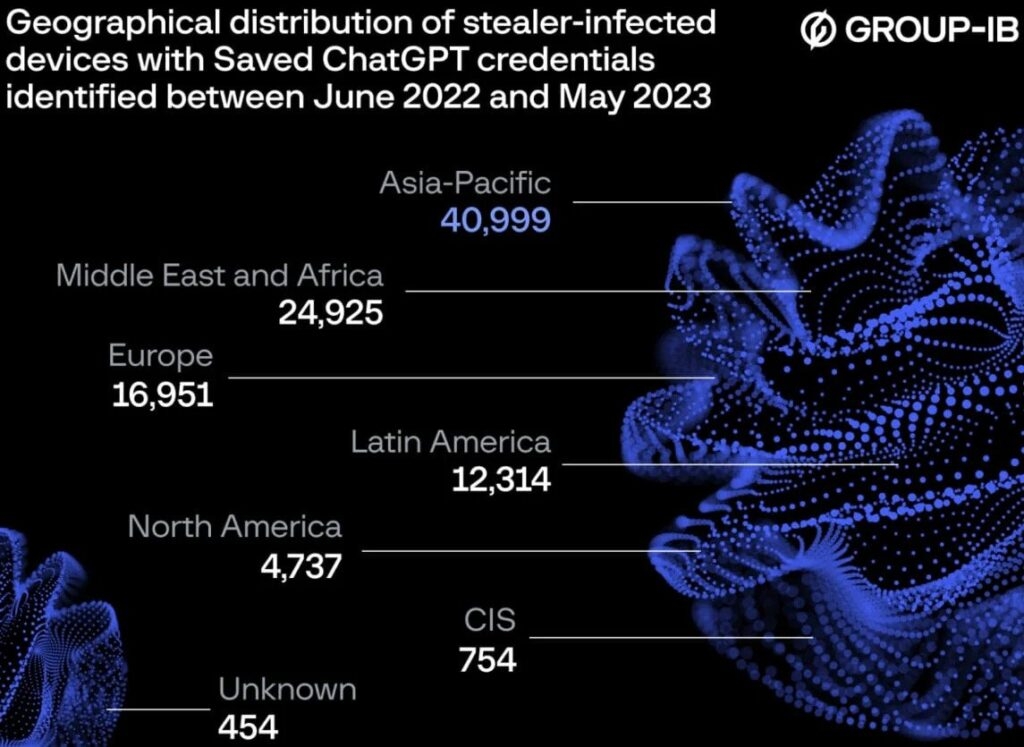

As for the targeted regions, Asia-Pacific was the most affected between June 2022 and May 2023, followed by the Middle East and Africa, Europe, and others.

Because ChatGPT allows users to store their conversations, gaining access to an account can mean gaining insight into proprietary information, personal communications, software code, and more.

That ChatGPT accounts now appear alongside cryptocurrency wallet information, credit card data, and other conventionally targeted data types marks the rising importance of AI-powered tools for users and businesses.

Many enterprises are integrating ChatGPT into their workflows. Employees enter classified correspondence or use the bot to optimize proprietary code. Given that ChatGPT's standard configuration retains all conversations, this could inadvertently offer a trove of sensitive intelligence to threat actors if they obtain account credentials.

Because of these concerns, technology giants such as Samsung have banned employees from using ChatGPT on work machines, and Samsung has gone as far as threatening to terminate employees who fail to follow the policy.

According to Group-IB's data, the number of stolen ChatGPT logs has increased continuously over time, with the majority coming from the Raccoon info-stealer, followed by Vidar and RedLine.

Cybersecurity researchers advise users who enter sensitive data into ChatGPT to consider disabling the chat saving option in ChatGPT's settings, or to delete conversations once they are done using the AI tool.

However, many information stealers also take screenshots of the compromised machine or perform keylogging, so even if the victim does not save conversations to the account, a malware infection can still lead to a data leak.

Sadly, ChatGPT has already suffered a data breach in which users were able to see other users' personal information and chats. Users should avoid entering any sensitive information into AI-based platforms.
